Dath | Dah

An exploration of machine learning and voice-triggered AI

Partners

GUIR

Glasgow Life

Royal Conservatoire of Scotland

The Merchant City Festival

Challenge

Dath | Dah was a commission for Glasgow Life’s new Gaelic arts programme GUIR, which gives artists opportunities to create new and challenging works exploring Gaelic culture across different art forms. Leading the project was our producer Lauren Glasgow, a first-generation urban Gaelic speaker keen to explore how immersive media can bridge the gap between Gaelic and the mainstream.

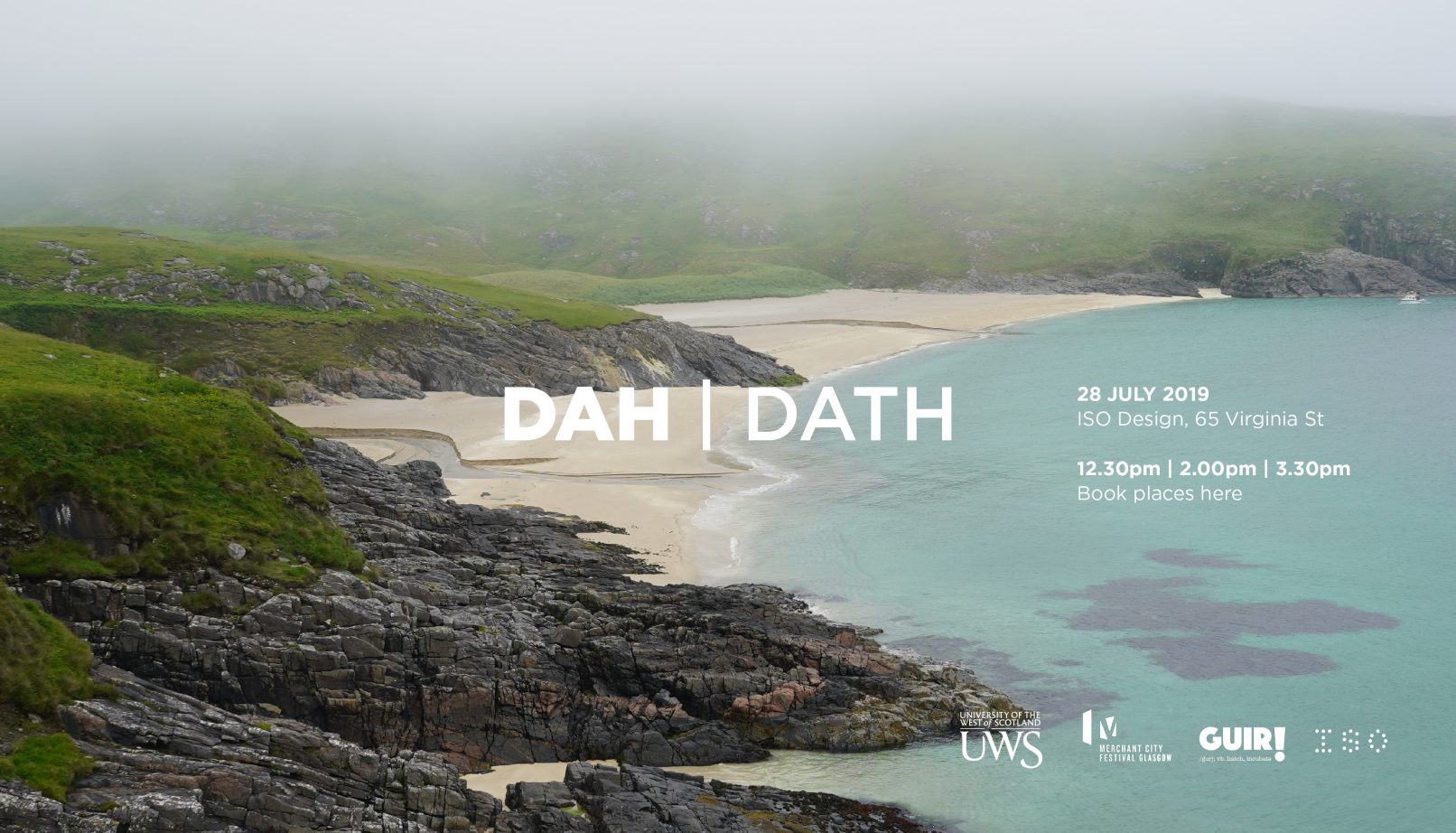

The project grew out of residencies and site visits to remote Scottish islands. Our intention was to create a machine learning system that would engage a non-Gaelic-speaking audience while mimicking natural language progression.

Process

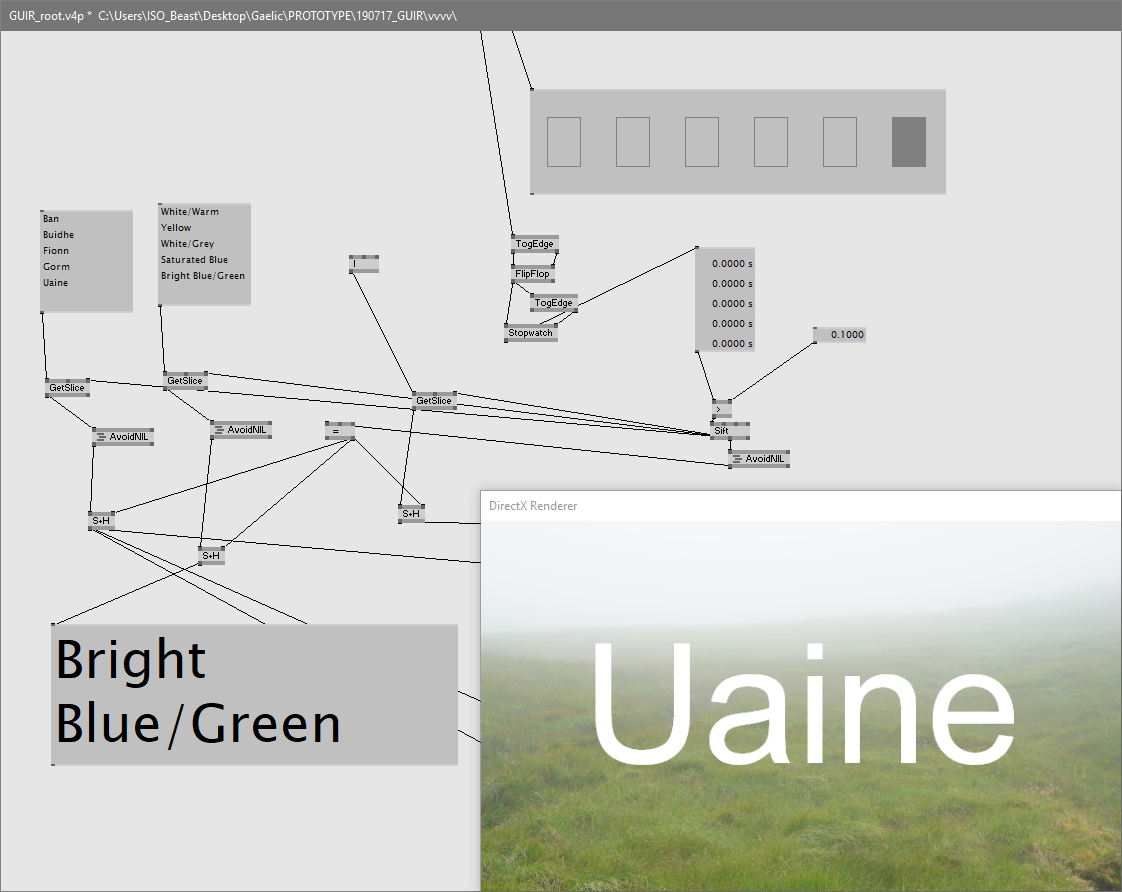

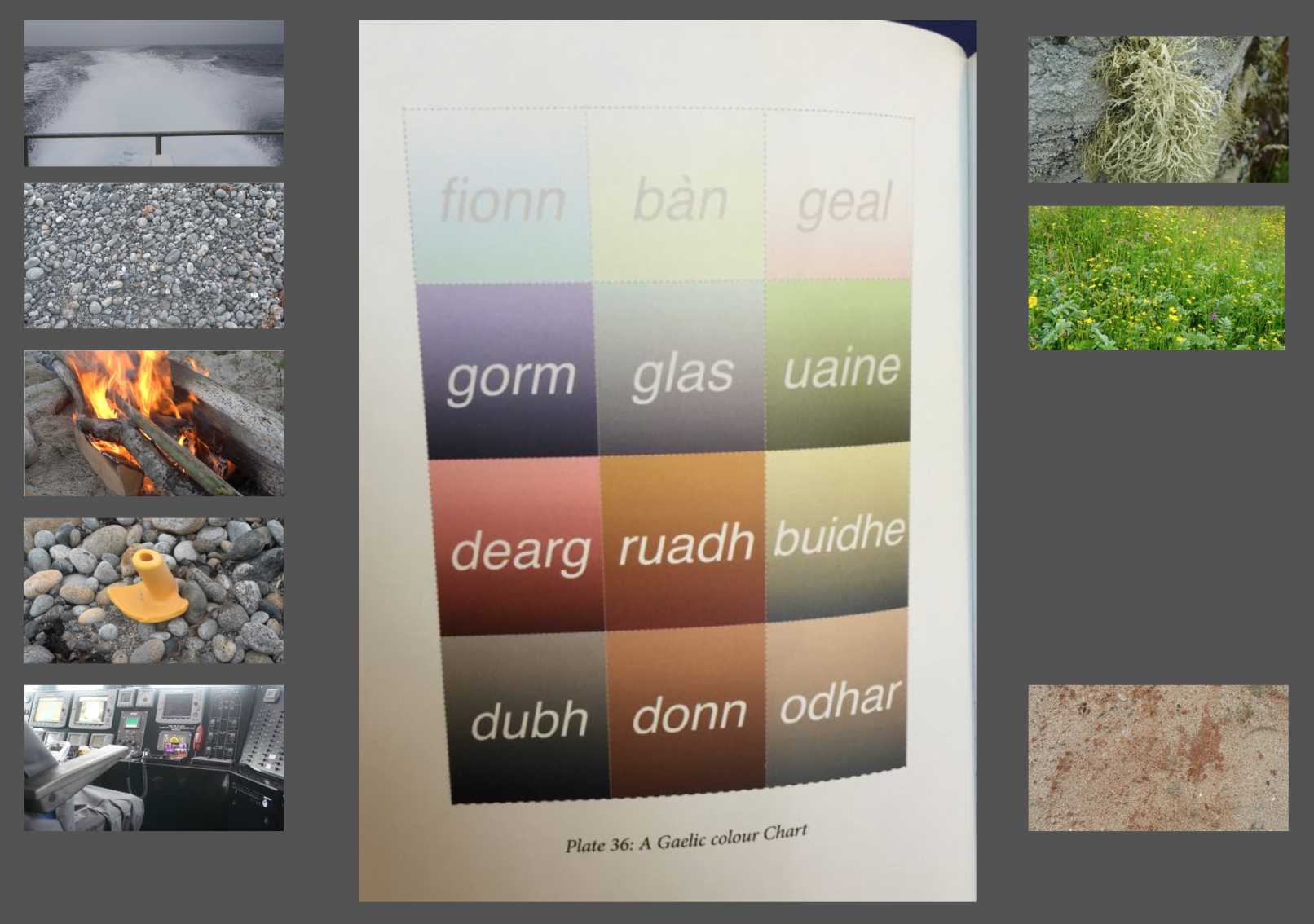

During the discovery phase of the project, Lauren reconnected deeply with the Gaelic language. Not having spoken Gaelic regularly in years, she was particularly intrigued by the way Gaels describe colours in terms of an object’s materiality. She also learned that many of the nuances Gaelic colour words offer are no longer used in modern Gaelic. Seeing how the spoken language had changed inspired us to explore speech in the context of emerging technologies.

Siri, Google, Alexa: voice-controlled virtual assistants like these have become extremely popular in recent years, whether on our phones, speakers, or other smart devices in our homes. With these sophisticated AI-powered systems in mind, we started wondering whether we could train a simple machine learning system to recognise Gaelic words. How would the system evolve once we added different voices and accents to the database?

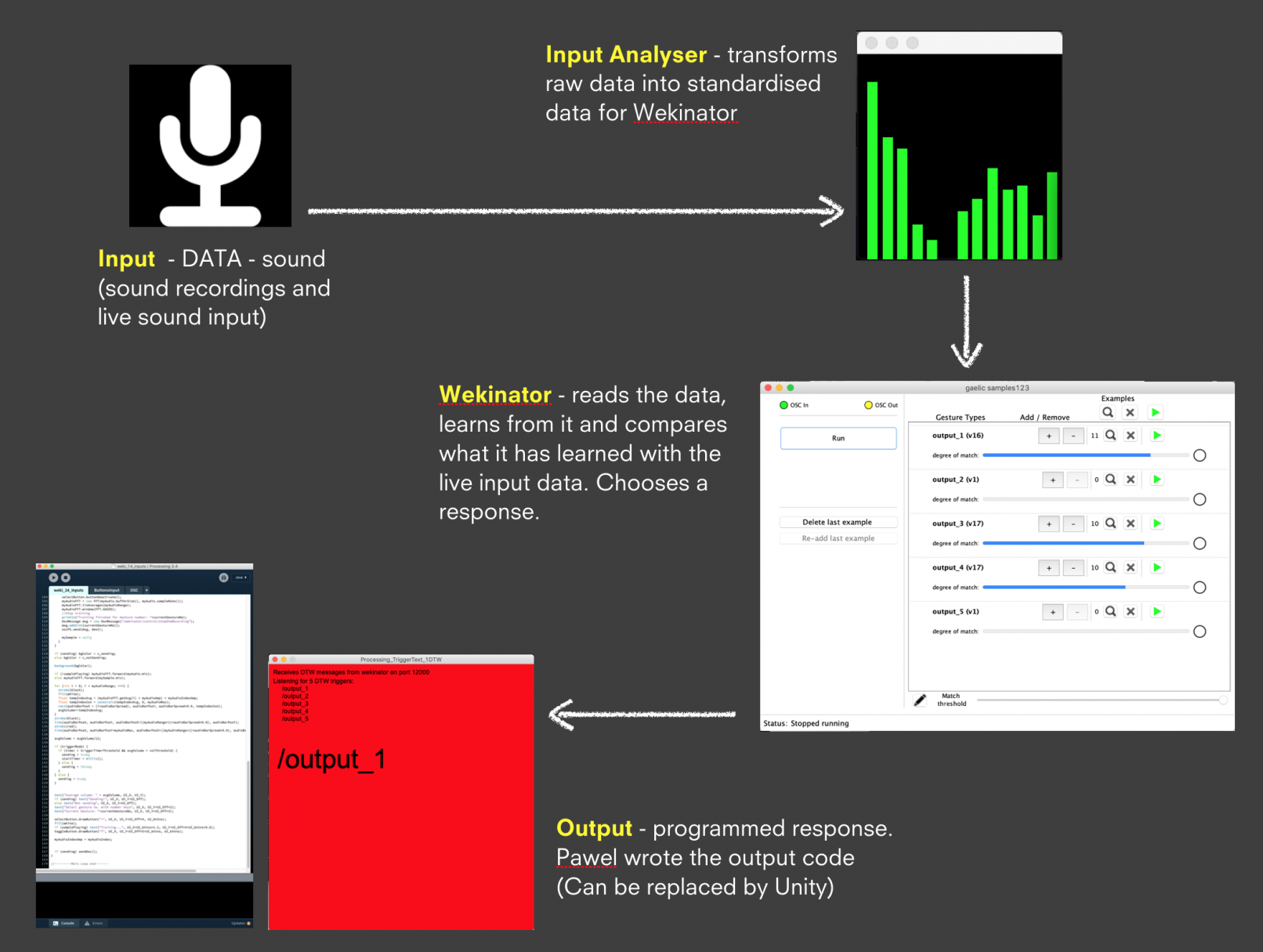

With a tight project timeline, we looked for tools that would get us started with machine learning quickly. In the end we chose Wekinator, open-source software aimed at musicians, artists and creators. Wekinator allowed us to rapidly develop different interactive machine learning systems without writing any machine learning code ourselves. We soon had a working prototype consisting of three parts. A bespoke Processing app performs audio analysis on microphone input or pre-recorded audio samples and forwards the processed data to Wekinator. Wekinator compares the incoming data with its training data to detect possible matches. It then sends the result of the comparison to Unity, where a visual response is generated.

We experimented with different machine learning techniques in Wekinator (regression, classification, dynamic time warping) and found that classification worked best for what we wanted to achieve. We also learned how much good signal processing matters: to create useful data for Wekinator, we picked the best microphone at our disposal and tweaked our Processing code numerous times to improve results.
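To illustrate what classification means here, the sketch below assigns an incoming feature vector to the label of its closest training examples (k-nearest neighbours). Wekinator offers several classifiers and the text does not say which one was used, so this is a generic illustration of the idea rather than a description of the project's model. The feature values are made up, and the two labels ("dearg", red, and "gorm", blue) are example Gaelic colour words, not necessarily among those used in the piece.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], str]  # (feature vector, word label)

# Hypothetical training data: two audio features per recording, invented for illustration.
TRAINING_EXAMPLES: List[Example] = [
    ([0.90, 0.10], "dearg"),
    ([0.80, 0.20], "dearg"),
    ([0.85, 0.15], "dearg"),
    ([0.10, 0.90], "gorm"),
    ([0.20, 0.80], "gorm"),
    ([0.15, 0.85], "gorm"),
]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(sample: Sequence[float],
             examples: List[Example] = TRAINING_EXAMPLES,
             k: int = 3) -> str:
    """Return the most common label among the k training examples closest to the sample."""
    nearest = sorted(examples, key=lambda ex: squared_distance(ex[0], sample))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(classify([0.82, 0.18]))
    print(classify([0.12, 0.88]))
```

Because the prediction depends entirely on which stored examples lie nearest, the quality of the features and of the labelled recordings matters more than the algorithm itself.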

With an improved system in place, we continued to feed training data into the machine learning model, using a variety of male and female voices, different accents, Gaelic and non-Gaelic speakers, and native and non-native English speakers. Ultimately, mispronounced samples taught the model the wrong patterns, diluting the training data and making recognition more difficult.

Outcome

Reverting to a small, selective dataset, we publicly launched Dath | Dah at the Merchant City Festival. Participants took part in a 10-minute solo interactive experience and data-gathering exercise, in which we recorded their attempts at pronouncing five Gaelic colour words. Those recordings are stored for future expansion of the work, and an analysis of participants’ scores was shared at the Royal Conservatoire of Scotland Learning and Teaching Conference.