The Blue Apple Tree

Digitally enhanced theatre

Partners

National Theatre of Scotland

Kai Fischer

Simon Meek / The Secret Experiment

Challenge

How can virtual production techniques be used to help shape new live experiences for audience and performers?

Commissioned by the National Theatre of Scotland, we were paired with theatre director and set designer Kai Fischer to explore Kai’s early ideas around using tracking technologies to reveal new layers of visuals and offer visual prompts to actors.

We proposed a multidisciplinary team: Kai, working with regular ISO collaborator and interactive storyteller Simon Meek and with isoLABS research and development leads Verena Henn and Pawel Kudel. The aim was to see if we could help push the boundaries of digitally enhanced theatre across an intense week of workshops.

Process

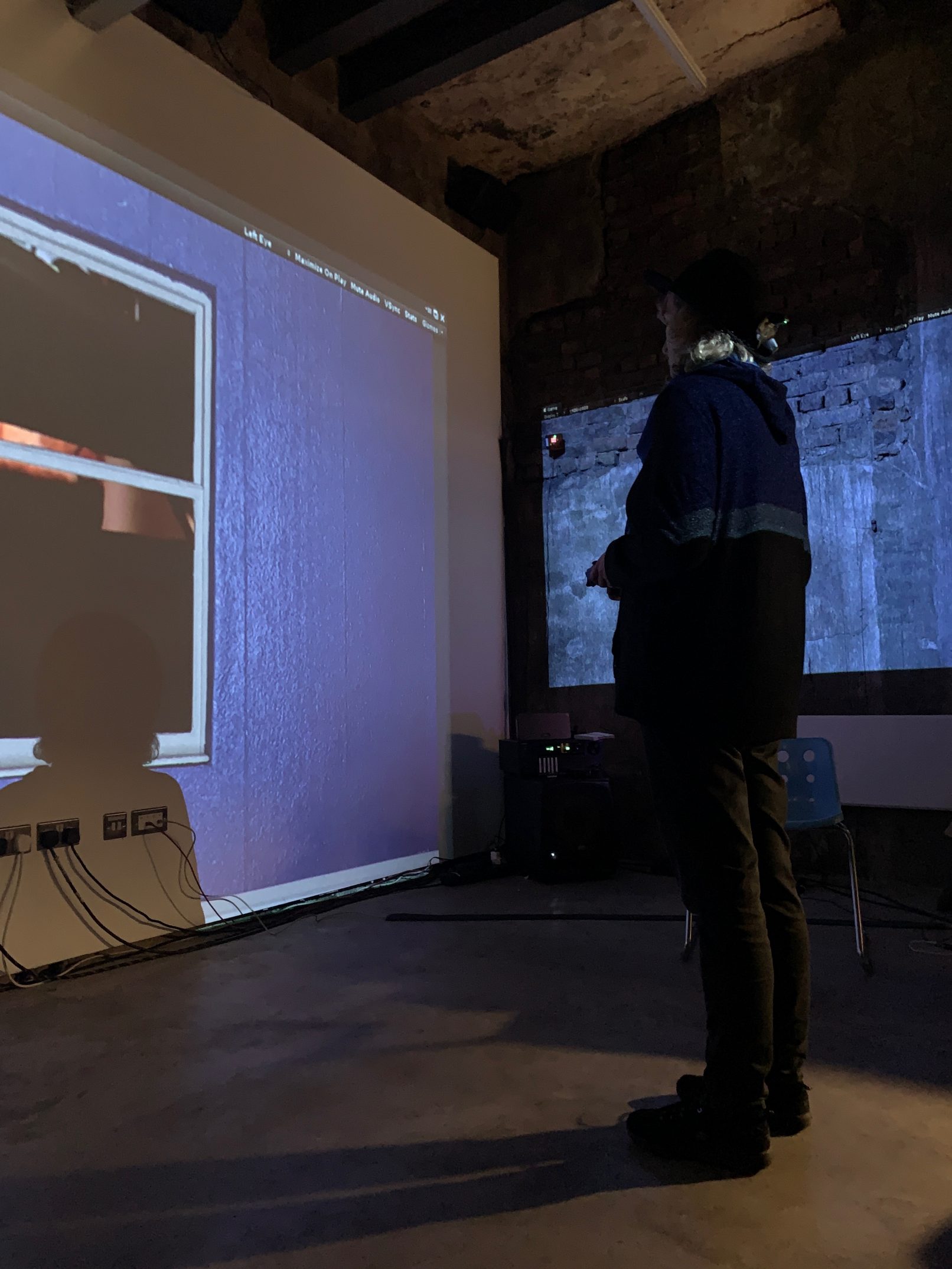

On our side, we came equipped with some rough ideas and knowledge of Unity, as well as a VR headset, a few trackers, a Rokoko Smartsuit and a screening room equipped with projectors. Kai brought experience of working on plays with various actors – and a plethora of ideas and experiments aimed at improving the workflows of stage creation.

Before we started the workshops we met for a ‘show and tell’ presentation introducing everyone’s perspectives and knowledge, as well as what we expected from the next five days of work. Having ordered the ideas by priority and (assumed) difficulty, we wanted to begin by experimenting with Vive Tracker-based head and gaze tracking.

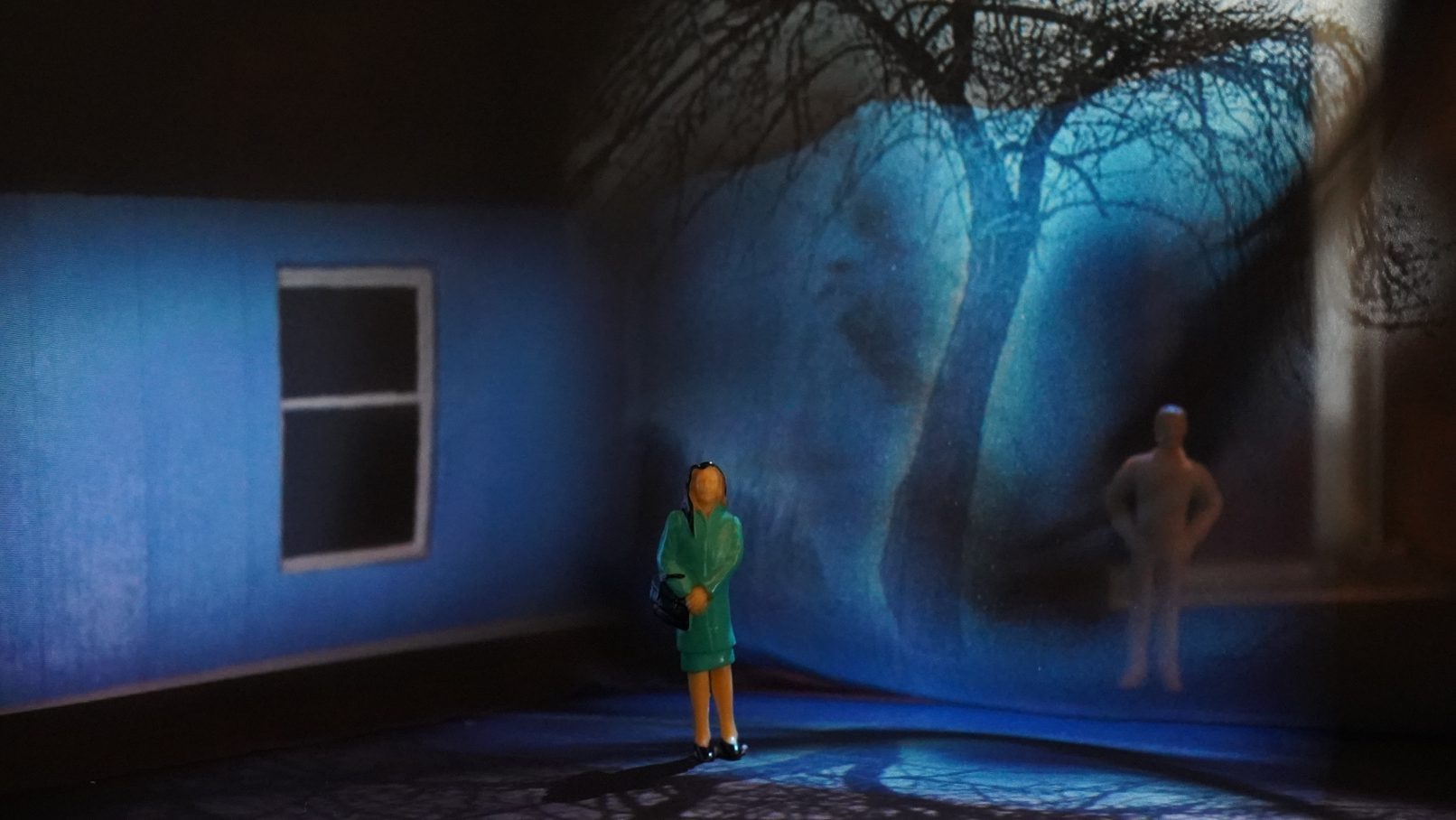

In preparation, Kai made a physical model representing the stage he had in mind. The next step was to take it into realtime 3D – which turned out to be surprisingly different from “architectural” 3D. The drawings, file formats and software he used were aimed at building an accurate physical set, while what we needed was a model that could be fed into Unity. Either way, it was an interesting first hurdle and learning experience.

We also got a few projectors to play around with. The high-quality ultra-short-throw projectors Kai brought in were great, but lacked the freedom of standard projectors. Interestingly, the hardware we ended up using the most was the tiny portable ‘pico’ projectors that Kai brought to illuminate his set model. They were far easier to rig up in the ways we required, and even though they lacked brightness and resolution, in a project like this it’s all about flexibility and ease of use.

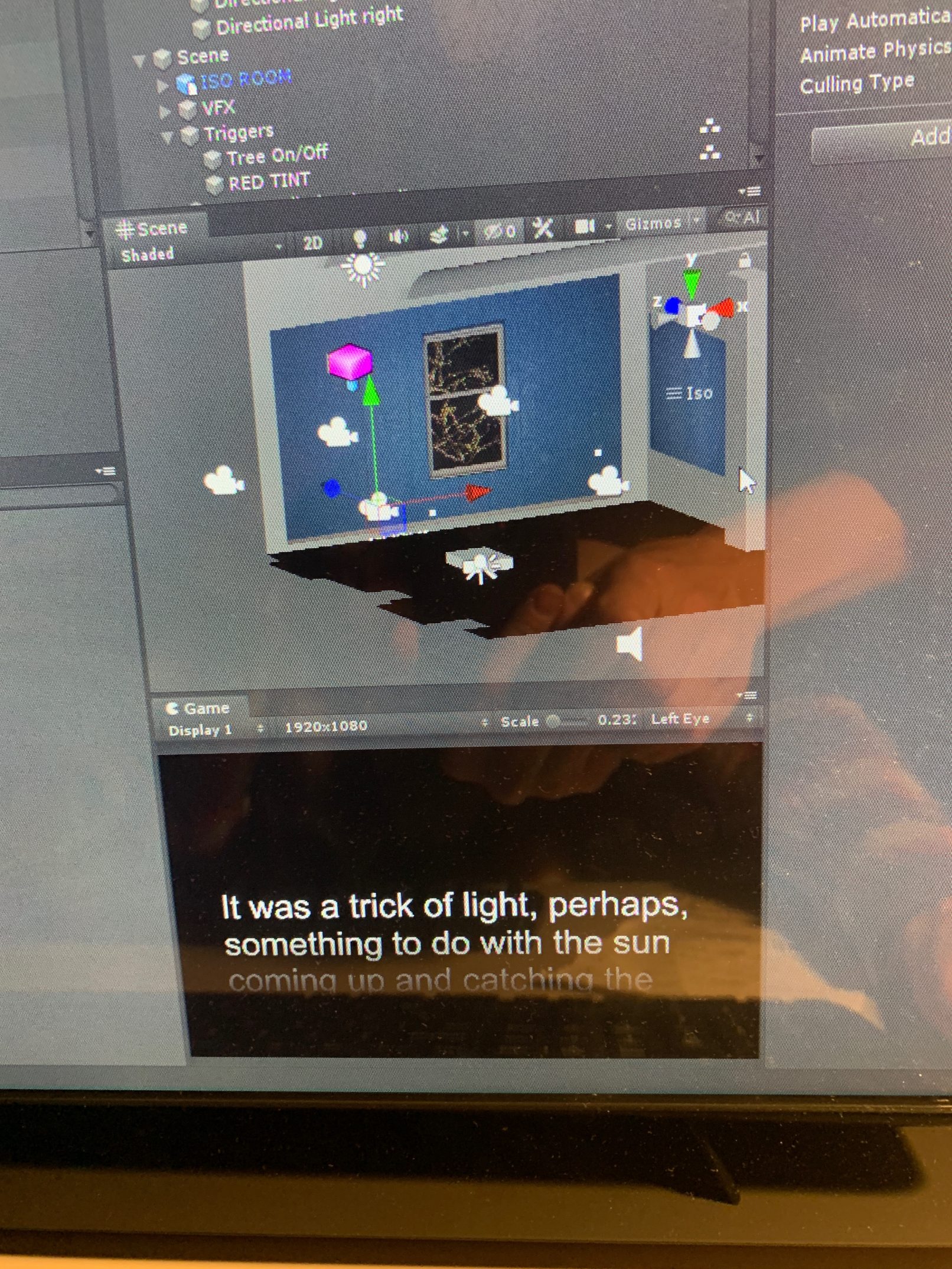

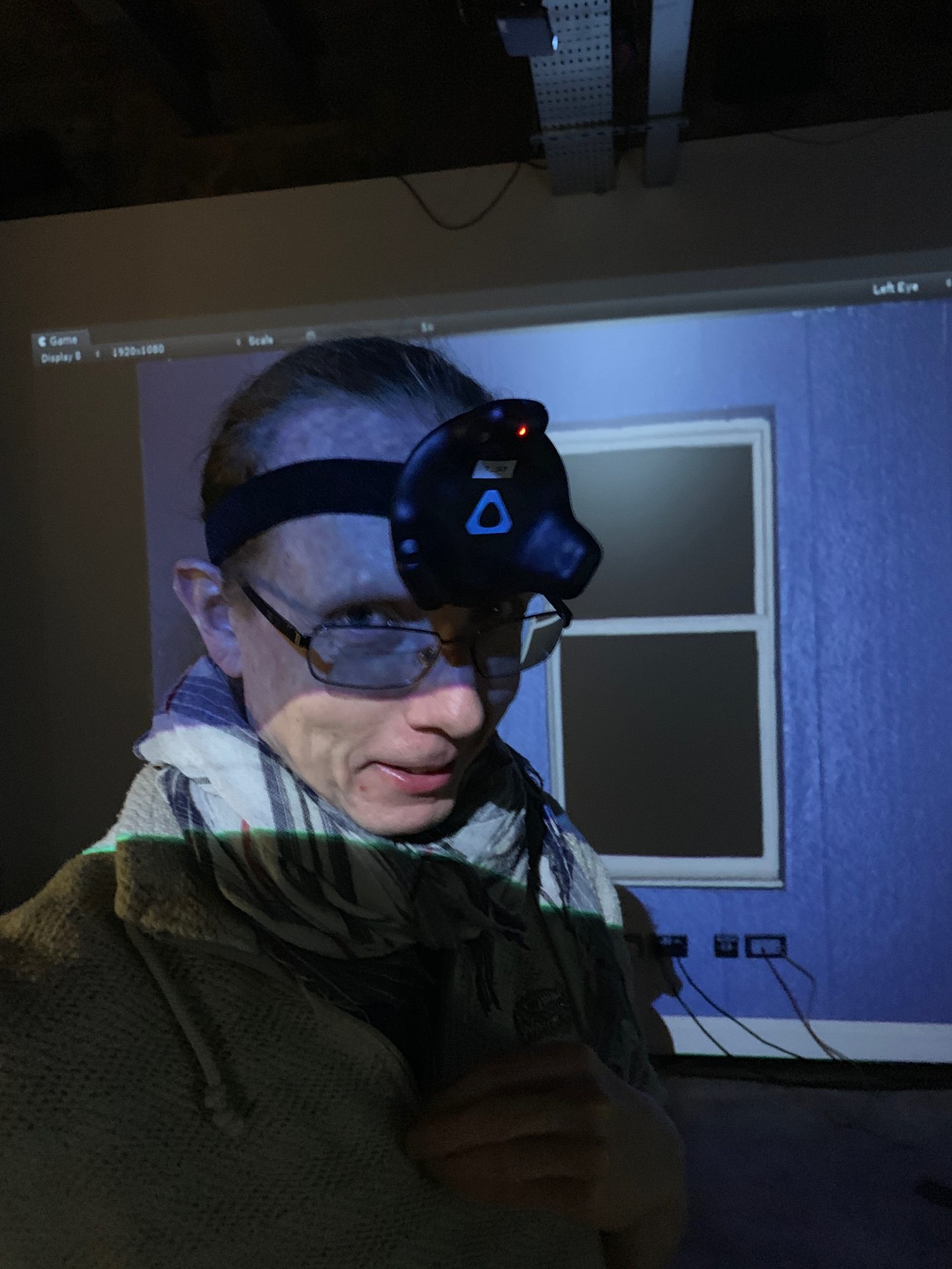

With the models sorted out, we started testing the trackers. The idea was to use accurate and fairly low-profile Vive Trackers, which are normally used in conjunction with a VR headset (which we omitted in this case). A tracker, when stably attached to the head, would provide us with enough positional and rotational data to imitate gaze tracking. We then tried out a few of Kai and Simon’s collaborative ideas:

– An interactive stage – Based on the actor’s position and where they look, we could automatically trigger events, such as the projection changing its output as the actor approaches a prop.

– Two-layered stage – The actor would cast a “light”, or a projection, wherever they looked, changing the projected scenography into its alternate version – which worked quite well, considering that one of the characters in the play was looking at the world from a different perspective.

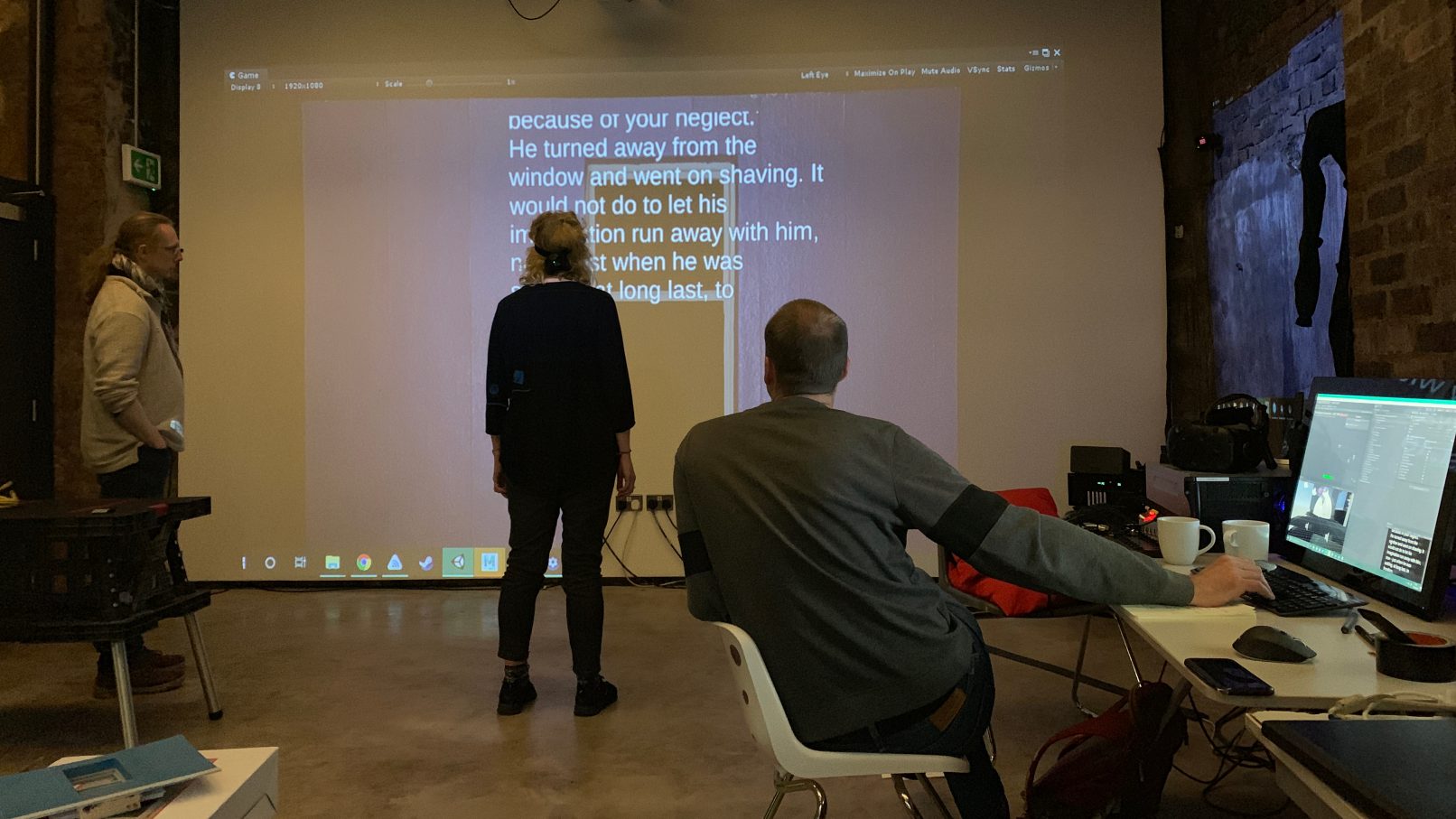

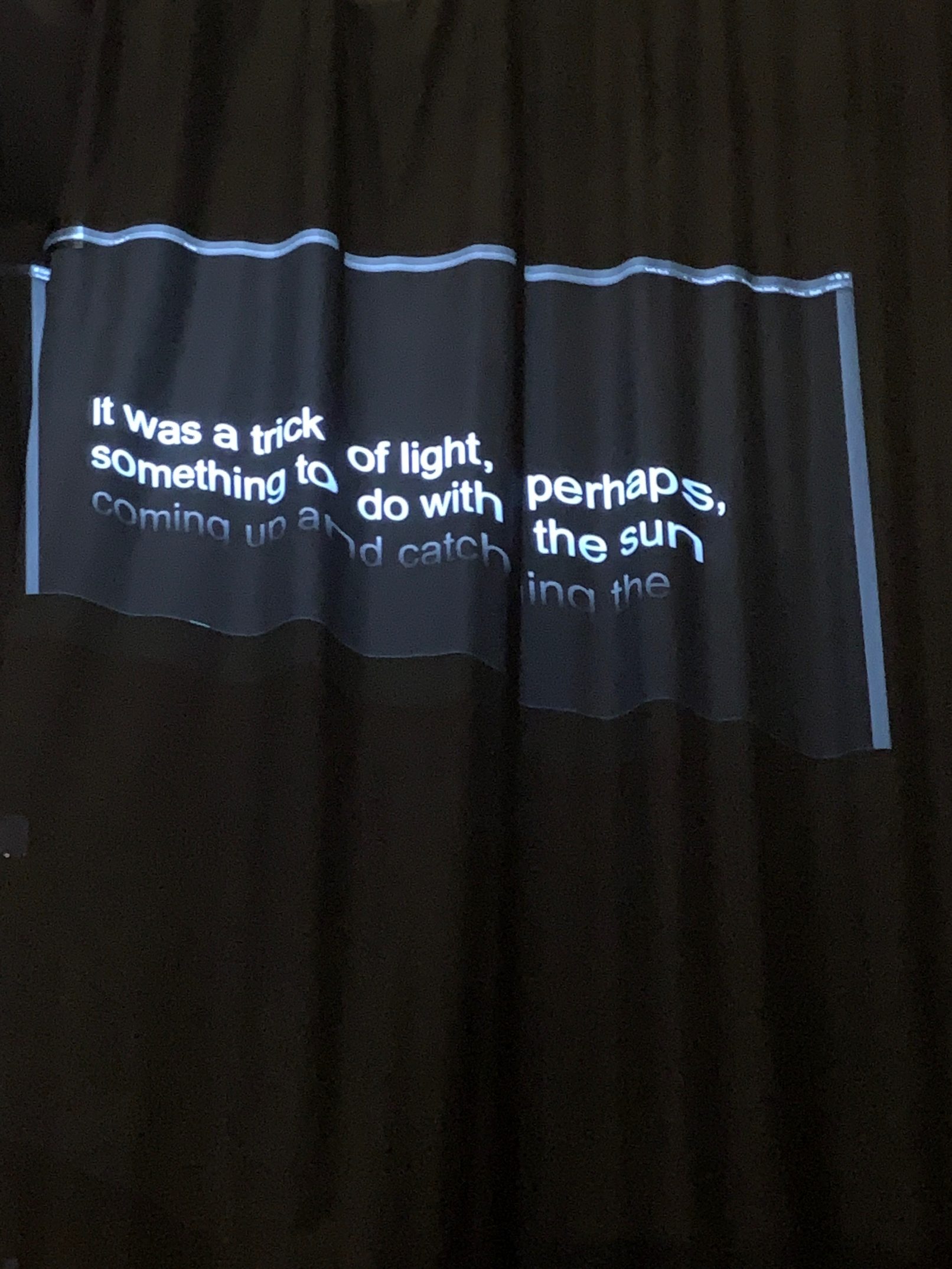

– Scene-integrated prompter – Actors would be able to remotely cast a relevant part of the script onto any projected surface, allowing them to remind themselves of their lines without looking at a specific place (which would otherwise contain some sort of teleprompter). This was something Kai really wanted to focus on, since one of the goals of the workshops was to figure out ways to enable older actors to act without worrying about forgetting the script.
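To make the tracker-driven ideas above a little more concrete, here is a minimal sketch of the underlying geometry, written in plain Python rather than taken from our Unity project. It turns a head position and rotation into a “gaze” ray, finds where that ray lands on a flat projection surface, and checks whether the actor is close enough to a prop to trigger an event. The function names (`gaze_hit`, `near_prop`), the choice of local +Z as the forward direction and the example coordinates are all assumptions made for illustration.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # unit quaternion as (w, x, y, z)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q: v + 2w(u x v) + 2u x (u x v)."""
    w, u = q[0], (q[1], q[2], q[3])
    uv = cross(u, v)
    uuv = cross(u, uv)
    return (v[0] + 2.0 * (w * uv[0] + uuv[0]),
            v[1] + 2.0 * (w * uv[1] + uuv[1]),
            v[2] + 2.0 * (w * uv[2] + uuv[2]))


def gaze_hit(head_pos: Vec3, head_rot: Quat,
             plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Point where the head's forward ray meets a flat surface, or None.

    Assumption: the tracker's local +Z axis points where the actor looks.
    """
    forward = rotate(head_rot, (0.0, 0.0, 1.0))
    denom = dot(forward, plane_normal)
    if abs(denom) < 1e-9:
        return None  # looking parallel to the surface
    to_plane = (plane_point[0] - head_pos[0],
                plane_point[1] - head_pos[1],
                plane_point[2] - head_pos[2])
    t = dot(to_plane, plane_normal) / denom
    if t < 0.0:
        return None  # surface is behind the actor
    return (head_pos[0] + forward[0] * t,
            head_pos[1] + forward[1] * t,
            head_pos[2] + forward[2] * t)


def near_prop(head_pos: Vec3, prop_pos: Vec3, radius: float) -> bool:
    """True when the actor is within `radius` of a prop, measured on the floor."""
    return math.hypot(head_pos[0] - prop_pos[0],
                      head_pos[2] - prop_pos[2]) <= radius


if __name__ == "__main__":
    head = (0.0, 1.7, 0.0)                        # hypothetical head position, metres
    facing_wall = (1.0, 0.0, 0.0, 0.0)            # identity rotation
    wall = ((0.0, 0.0, 3.0), (0.0, 0.0, -1.0))    # hypothetical back wall
    print("gaze lands at:", gaze_hit(head, facing_wall, *wall))
    print("near prop:", near_prop(head, (0.5, 0.0, 0.5), 1.0))
```

In a sketch like this, the returned hit point could drive the “light” of the two-layered stage or the placement of prompter text, while `near_prop` stands in for the kind of proximity check that would switch a projection as the actor approaches a prop.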

As a side idea, Kai wanted to try tracking actors’ fingers to allow them to draw on projections. Verena used her experience to set up a demo using a Leap Motion sensor, which did just that. The tricky part would be to integrate the sensor into the scene – something we decided to think about at another time.

The last two days were split between testing what we’d already made with the actress and constantly refining the code and functionality in Unity. It was a back-and-forth between Kai, Simon and Margaret, constantly feeding back and refining the line-reminding experiments. At the same time Pawel was patching holes in Unity scripts to ensure the looming show and tell would go smoothly.

Outcome

Five days in, we had finished what we planned to look at (as well as a couple of additional tests). Everything was contained within a single Unity project and a few different scenes – which, including a live demo, was presented during a full-capacity NTS presentation to theatre makers and guests in ISO’s demonstration space.

There was also so much that we only had a chance to talk about and consider – how all that we’d done could be implemented in actual theatres, whether it’s something for rehearsals or live plays, and so on.

The workflows created have allowed us to extend this into a series of demos for micro-projects, and have extended our experience in virtual production techniques. One of our biggest takeaways, though, was learning from Kai about the processes of the set designer, the importance of physical models and the role of the director, not only in guiding performers but also in being sensitive to the support they may require to deliver their work at the highest level.